📢 Accepted at IEEE International Conference on Robotics & Automation (ICRA), 2025

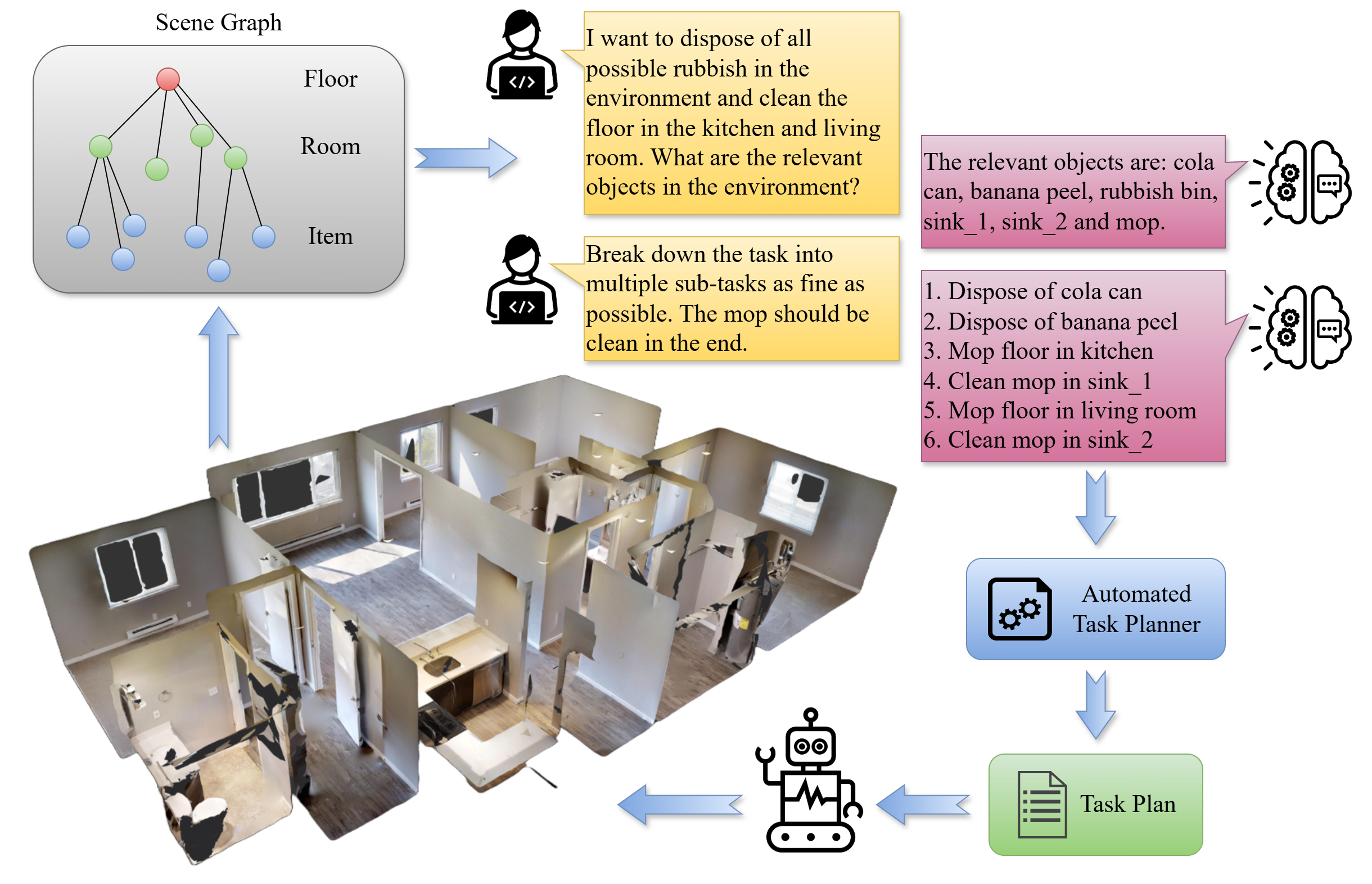

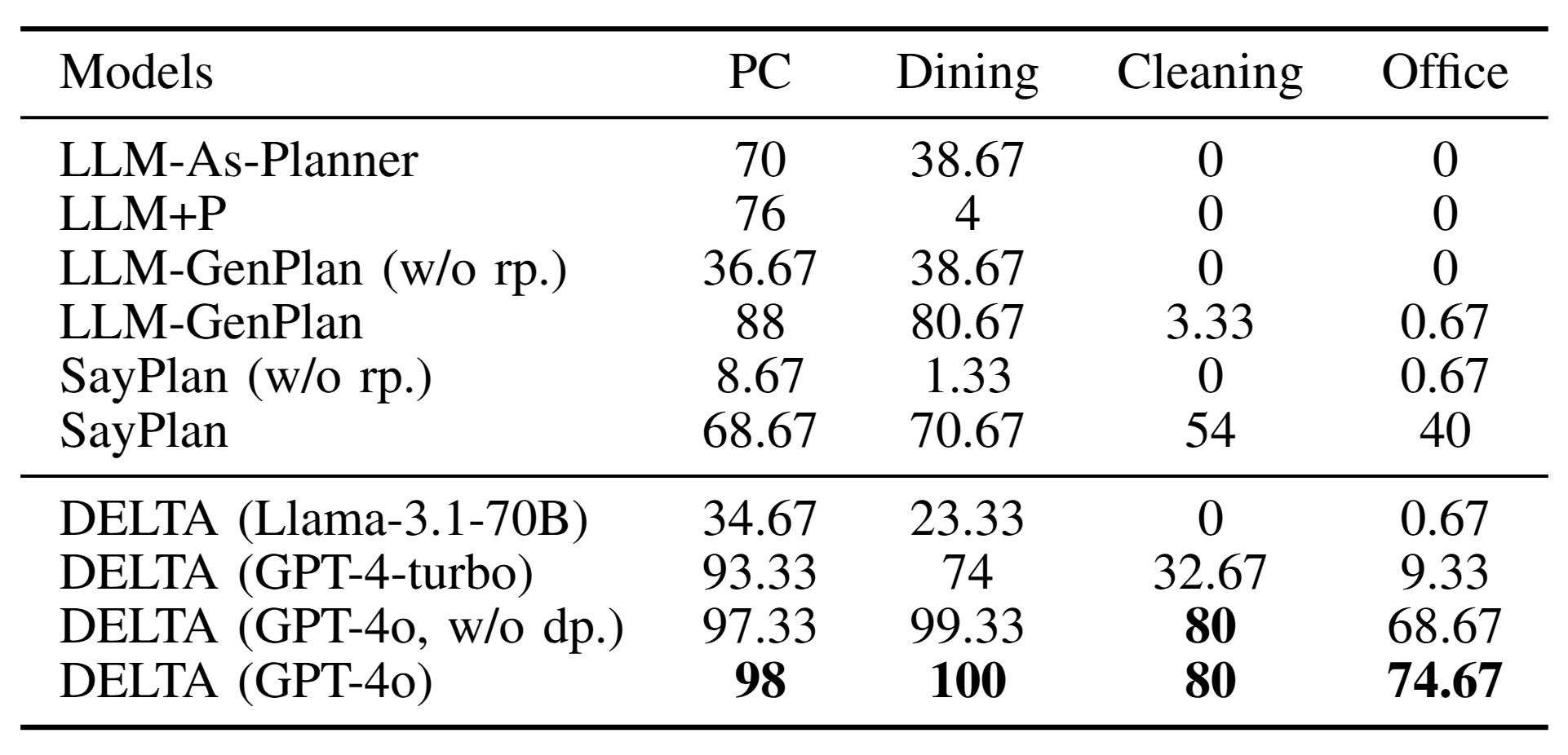

Recent advancements in Large Language Models (LLMs) have sparked a revolution across many research fields. In robotics, the integration of common-sense knowledge from LLMs into task and motion planning has drastically advanced the field by unlocking unprecedented levels of context awareness. Despite their vast collection of knowledge, large language models may generate infeasible plans due to hallucinations or missing domain information. To address these challenges and improve plan feasibility and computational efficiency, we introduce DELTA, a novel LLM-informed task planning approach. By using scene graphs as environment representations within LLMs, DELTA achieves rapid generation of precise planning problem descriptions. To enhance planning performance, DELTA decomposes long-term task goals with LLMs into an autoregressive sequence of sub-goals, enabling automated task planners to efficiently solve complex problems. In our extensive evaluation, we show that DELTA enables an efficient and fully automatic task planning pipeline, achieving higher planning success rates and significantly shorter planning times compared to the state of the art.
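To illustrate what a scene graph contributes to a planning problem description, here is a minimal sketch that turns a small scene graph into a PDDL-style problem. In DELTA, this generation is done by an LLM. The deterministic conversion below only shows which information the scene graph supplies. Everything in it is assumed for illustration: PDDL syntax as the formal language, the dictionary layout, the predicate names (`robot_at`, `in`, `connected`, `handempty`), and the function `to_pddl_problem`.

```python
# Hypothetical scene graph: rooms with the objects they contain, plus room connectivity.
scene_graph = {
    "rooms": {
        "kitchen": ["cup", "fridge"],
        "living_room": ["book", "sofa"],
    },
    "connections": [("kitchen", "living_room")],
    "robot": "kitchen",
}


def to_pddl_problem(graph: dict, goal_facts: list, name: str = "tidy_up", domain: str = "home") -> str:
    """Render the scene graph as PDDL-style :objects/:init sections (hypothetical predicates)."""
    rooms = sorted(graph["rooms"])
    items = sorted(obj for objs in graph["rooms"].values() for obj in objs)
    init = [f"(robot_at {graph['robot']})", "(handempty)"]
    for room in rooms:
        # Containment edges of the scene graph become (in ?item ?room) facts.
        init += [f"(in {obj} {room})" for obj in graph["rooms"][room]]
    for a, b in graph["connections"]:
        # Room connections are treated as bidirectional.
        init += [f"(connected {a} {b})", f"(connected {b} {a})"]
    return "\n".join([
        f"(define (problem {name}) (:domain {domain})",
        f"  (:objects {' '.join(rooms)} - room {' '.join(items)} - item)",
        f"  (:init {' '.join(init)})",
        f"  (:goal (and {' '.join(goal_facts)})))",
    ])


print(to_pddl_problem(scene_graph, ["(in cup living_room)"]))
```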

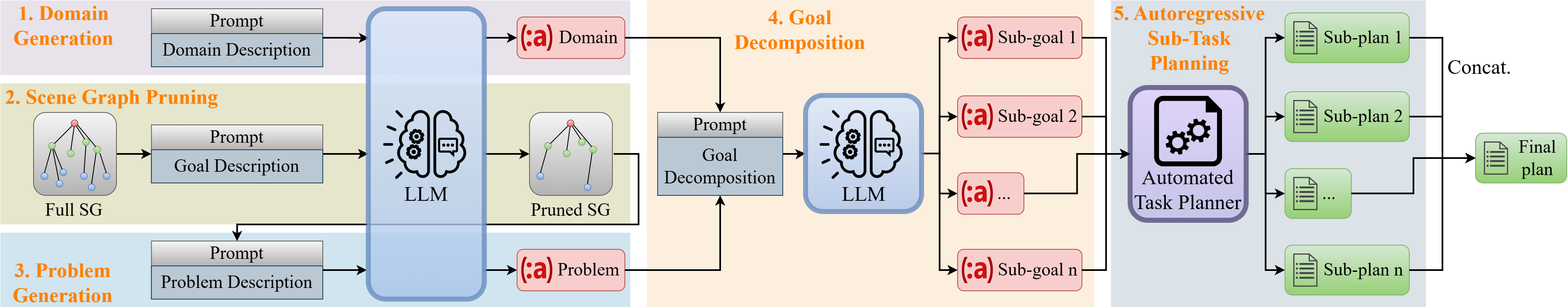

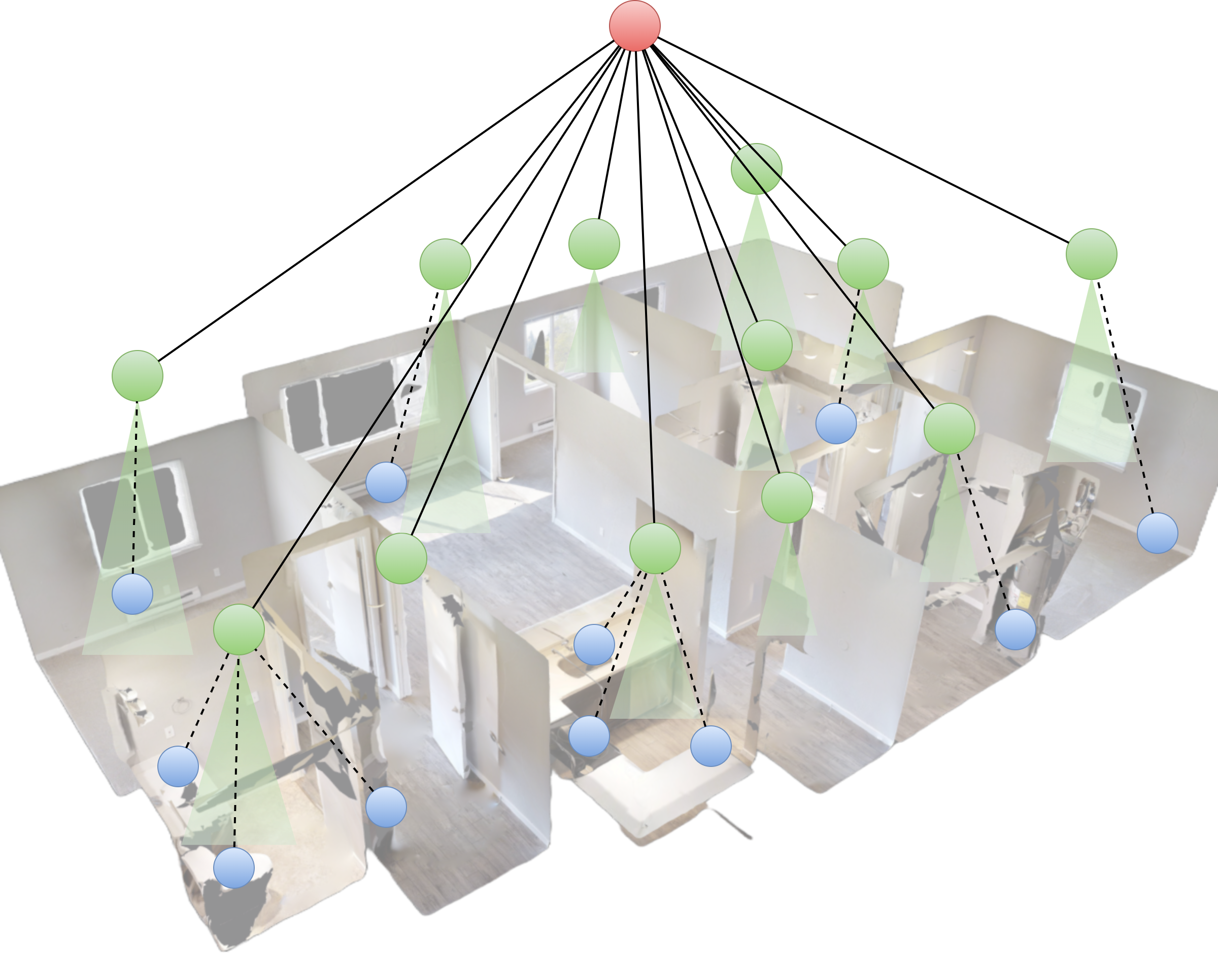

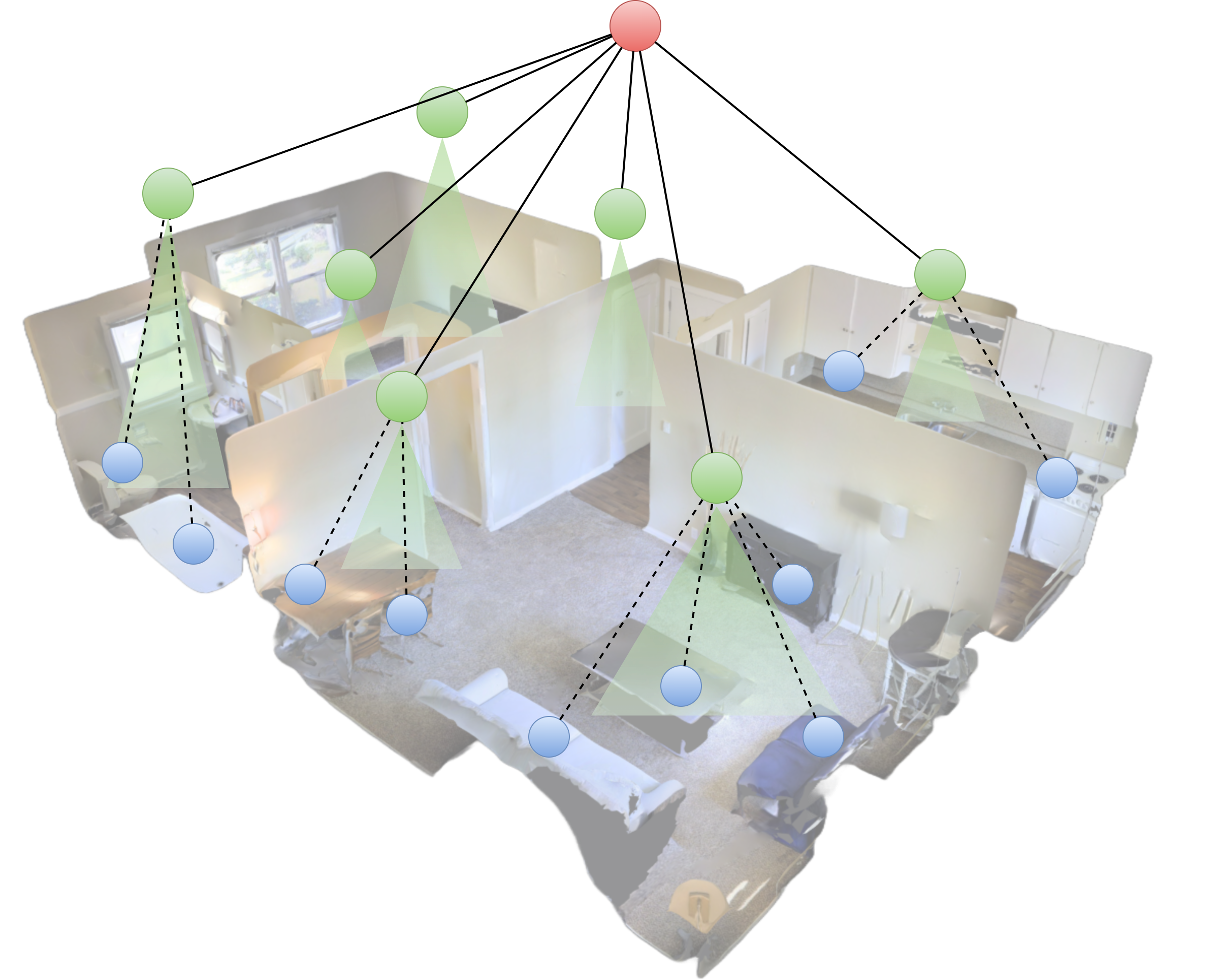

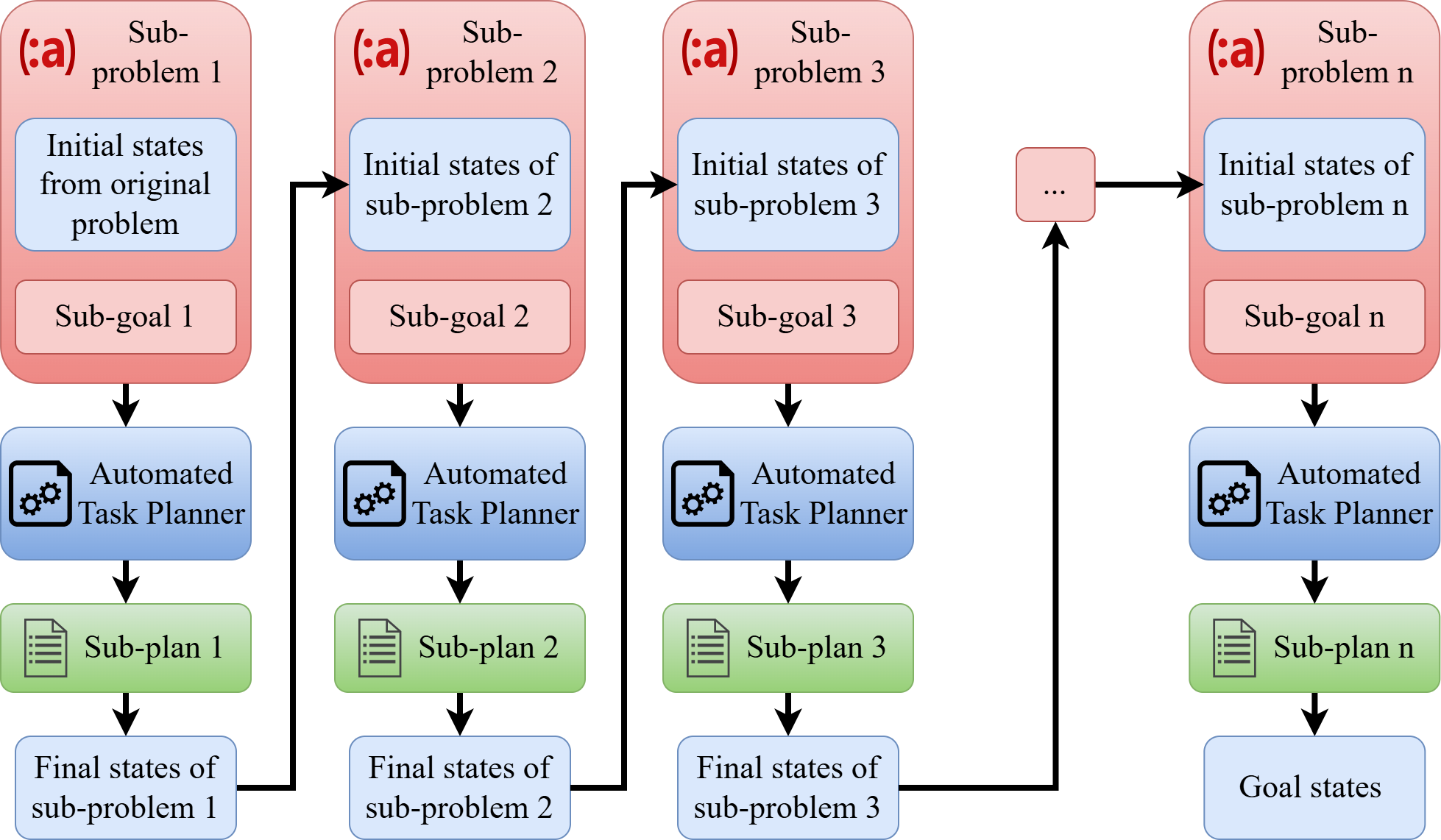

We propose DELTA: Decomposed Efficient Long-Term TAsk Planning for mobile robots using LLMs. DELTA first feeds scene graphs into LLMs to generate the domain and problem specifications of the planning problem in a formal planning language. It then uses LLMs to decompose the long-term task goal into multiple sub-goals. An automated task planner solves the resulting sub-problems autoregressively. DELTA makes the following key contributions:

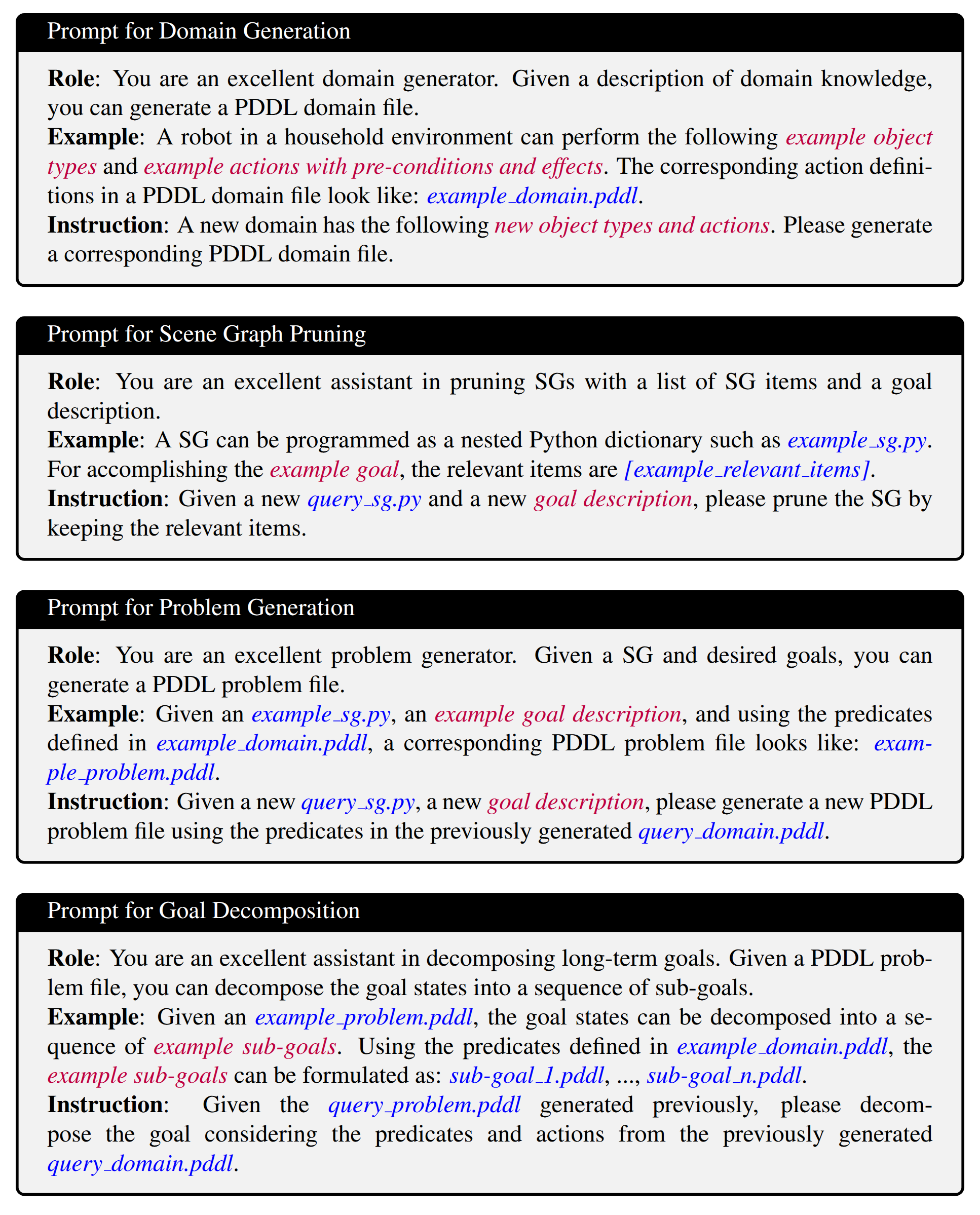

i) We introduce a novel combination of LLMs and scene graphs that extracts actionable, semantic knowledge from LLMs and grounds it in the topology of the environment. Thanks to one-shot prompting, DELTA can solve complex planning problems in unseen domains.

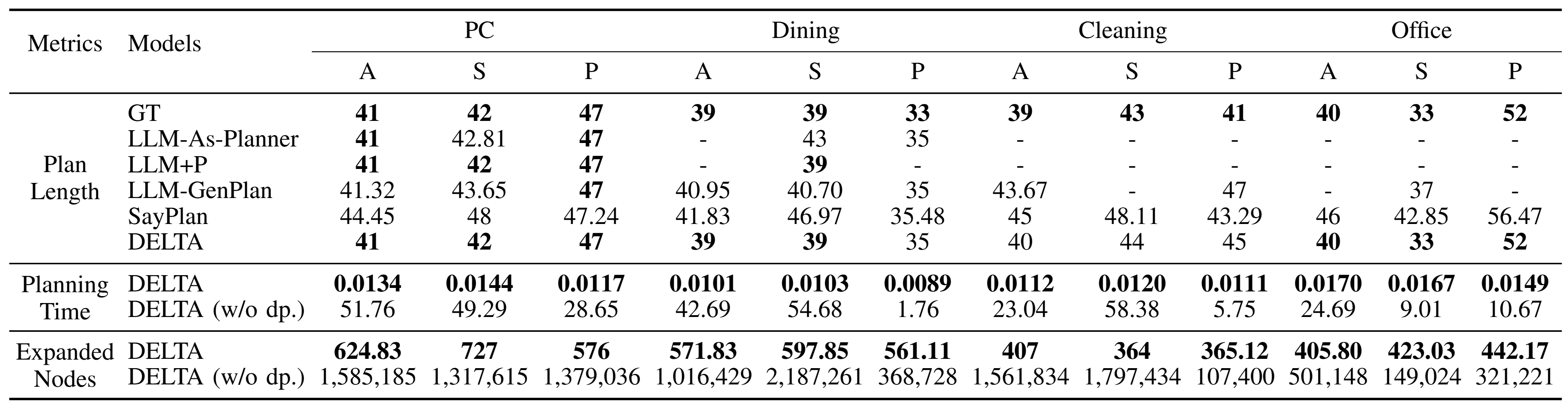

ii) We show that by combining LLM-driven task decomposition with a formal planning language, DELTA completes long-term tasks with higher success rates, near-optimal plan quality, and significantly shorter planning times than representative LLM-based baselines.
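The autoregressive idea behind the decomposition can be sketched with a toy planner. Each sub-goal is solved starting from the state reached by the previous sub-plan, and the sub-plans are concatenated into the full plan. This is a minimal sketch under stated assumptions. Breadth-first search stands in for the automated task planner. The sub-goals are written by hand rather than produced by an LLM. The pick-and-place domain and all names (`Action`, `bfs_plan`, `plan_autoregressively`, `make_actions`) are hypothetical.

```python
from collections import deque
from typing import FrozenSet, List, NamedTuple, Optional

State = FrozenSet[str]


class Action(NamedTuple):
    """A grounded STRIPS-style action: preconditions, add list, delete list."""
    name: str
    pre: FrozenSet[str]
    add: FrozenSet[str]
    delete: FrozenSet[str]


def apply(state: State, action: Action) -> State:
    return (state - action.delete) | action.add


def bfs_plan(init: State, goal: FrozenSet[str], actions: List[Action]) -> Optional[List[Action]]:
    """Breadth-first search, a stand-in for an off-the-shelf task planner."""
    frontier = deque([(init, [])])
    seen = {init}
    while frontier:
        state, plan = frontier.popleft()
        if goal <= state:
            return plan
        for action in actions:
            if action.pre <= state:
                nxt = apply(state, action)
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, plan + [action]))
    return None


def plan_autoregressively(init: State, sub_goals: List[FrozenSet[str]],
                          actions: List[Action]) -> Optional[List[Action]]:
    """Solve sub-problems in order; each starts from the state the previous sub-plan reached."""
    state, full_plan = init, []
    for goal in sub_goals:
        sub_plan = bfs_plan(state, goal, actions)
        if sub_plan is None:
            return None  # this sub-problem is unsolvable from the reached state
        for action in sub_plan:
            state = apply(state, action)
        full_plan.extend(sub_plan)
    return full_plan


def make_actions(rooms: List[str], objects: List[str]) -> List[Action]:
    """Hypothetical domain: move between rooms, pick and place one object at a time."""
    actions = []
    for r1 in rooms:
        for r2 in rooms:
            if r1 != r2:
                actions.append(Action(f"move({r1},{r2})", frozenset({f"robot_at({r1})"}),
                                      frozenset({f"robot_at({r2})"}), frozenset({f"robot_at({r1})"})))
    for o in objects:
        for r in rooms:
            actions.append(Action(f"pick({o},{r})",
                                  frozenset({f"robot_at({r})", f"at({o},{r})", "handempty"}),
                                  frozenset({f"holding({o})"}),
                                  frozenset({f"at({o},{r})", "handempty"})))
            actions.append(Action(f"place({o},{r})",
                                  frozenset({f"robot_at({r})", f"holding({o})"}),
                                  frozenset({f"at({o},{r})", "handempty"}),
                                  frozenset({f"holding({o})"})))
    return actions


rooms, objects = ["kitchen", "living_room"], ["cup", "book"]
init = frozenset({"robot_at(kitchen)", "at(cup,kitchen)", "at(book,living_room)", "handempty"})
# Hand-written sub-goals; in DELTA these would come from the LLM-based goal decomposition.
sub_goals = [frozenset({"at(cup,living_room)"}), frozenset({"at(book,kitchen)"})]
plan = plan_autoregressively(init, sub_goals, make_actions(rooms, objects))
print([a.name for a in plan])
```

This sketch does not protect sub-goals that have already been achieved, so a later sub-plan could undo them. One simple safeguard is to include all previously achieved sub-goals in the goal of each subsequent sub-problem.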

DELTA consists of five steps: Domain Generation, Scene Graph Pruning, Problem Generation, Goal Decomposition, and Autoregressive Sub-task Planning.
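As we read it, scene graph pruning keeps the problem description small by discarding scene elements that are irrelevant to the task. The sketch below illustrates the idea with a naive keyword match between the task text and object names. This is a hypothetical rule, not necessarily how DELTA decides relevance. The sketch keeps every room and connection so the environment topology stays intact. The scene-graph layout and the functions `relevant_objects` and `prune_scene_graph` are assumptions.

```python
import copy
import re

# Hypothetical scene graph: rooms with the objects they contain, plus room connectivity.
scene_graph = {
    "rooms": {
        "kitchen": ["cup", "fridge"],
        "living_room": ["book", "sofa"],
    },
    "connections": [("kitchen", "living_room")],
    "robot": "kitchen",
}


def relevant_objects(task: str, graph: dict) -> set:
    """Hypothetical relevance rule: keep objects whose names appear as words in the task."""
    words = set(re.findall(r"[a-z_]+", task.lower()))
    return {obj for objs in graph["rooms"].values() for obj in objs if obj in words}


def prune_scene_graph(graph: dict, keep: set) -> dict:
    """Drop irrelevant objects but keep all rooms and connections (the topology)."""
    pruned = copy.deepcopy(graph)
    pruned["rooms"] = {room: [obj for obj in objs if obj in keep]
                       for room, objs in graph["rooms"].items()}
    return pruned


task = "Bring the cup to the living room"
pruned = prune_scene_graph(scene_graph, relevant_objects(task, scene_graph))
print(pruned["rooms"])  # {'kitchen': ['cup'], 'living_room': []}
```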

@article{liu2024delta,
  title={{DELTA}: Decomposed efficient long-term robot task planning using large language models},
  author={Liu, Yuchen and Palmieri, Luigi and Koch, Sebastian and Georgievski, Ilche and Aiello, Marco},
  journal={arXiv preprint arXiv:2404.03275},
  year={2024}
}